Deploying containerized apps on AWS? In this article, I present my view on how you can choose between EKS and ECS on AWS for deploying your workloads.

A little foreword

To deliver products faster, many organisations are shifting their base architectural patterns toward distributed systems. It is now common practice to either bootstrap new applications on, or transition existing ones to, containerized or serverless architectures.

Managing clustered applications quickly becomes an overhead as the organisation scales, which creates a pressing need for a platform that can automate the management, deployment, and scaling of these clusters.

Some of the well-known players are Kubernetes (a.k.a. K8s), AKS, EKS, ECS, IBM Cloud Kubernetes Service, Azure Service Fabric, Helios, and Docker Swarm.

Kubernetes remains the most popular choice among organisations, and its adoption for container orchestration keeps growing.

___________________________________________________________________

ECS: ECS stands for Amazon Elastic Container Service. It’s a scalable container orchestration platform owned by AWS, designed to run, stop, and manage containers in a cluster. The containers themselves are defined as part of task definitions and run by ECS in the cloud.
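To make the idea of a task definition more concrete, here is a minimal sketch written as a Python dictionary. The field names follow the ECS task definition schema as I understand it; the family name, container name, and image are hypothetical placeholders, and the CPU and memory values are just one small example combination.

```python
import json

# Minimal Fargate-style task definition. The family, container name, and
# image are hypothetical placeholders chosen for illustration only.
task_definition = {
    "family": "demo-web",
    "networkMode": "awsvpc",
    "requiresCompatibilities": ["FARGATE"],
    "cpu": "256",     # CPU units (256 = 0.25 vCPU)
    "memory": "512",  # MiB
    "containerDefinitions": [
        {
            "name": "web",
            "image": "nginx:latest",
            "essential": True,
            "portMappings": [{"containerPort": 80, "protocol": "tcp"}],
        }
    ],
}


def container_names(definition):
    """Return the names of all containers declared in a task definition."""
    return [container["name"] for container in definition["containerDefinitions"]]


# Serialize to JSON, the format in which task definitions are registered.
print(json.dumps(task_definition, indent=2))
```

The printed JSON could be saved to a file and registered with something like `aws ecs register-task-definition --cli-input-json file://task.json`; treat that as a starting point rather than a production-ready definition.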

Apart from this, you have an option to deploy Amazon ECS on AWS Outposts. I will cover this in another article later.

Keep reading below to learn more about both the deployment options and when to use which one.

EKS: Amazon EKS is a managed service that makes it easy for you to run Kubernetes on AWS without needing to install and operate your own Kubernetes control plane or worker nodes. You have no access to the master nodes on EKS since they’re managed under the hood.

To run a Kubernetes workload, EKS provisions the control plane and the Kubernetes API in AWS-managed infrastructure, and you’re good to go. At this point, you can deploy workloads using Kubernetes tools like kubectl, Kubernetes Dashboard, and Helm, alongside infrastructure tools such as Packer, Terraform, and Terragrunt.
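As a rough illustration of what "deploying a workload with kubectl" involves, the sketch below builds a minimal Kubernetes Deployment manifest in Python. The `build_deployment` helper, the `demo-web` name, and the image are my own hypothetical choices; only the manifest structure (`apps/v1`, `Deployment`, selector and template labels) comes from the Kubernetes API.

```python
import json


def build_deployment(name, image, replicas=2, port=80):
    """Build a minimal apps/v1 Deployment manifest as a plain dictionary."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }


# Hypothetical workload name and image, for illustration only.
manifest = build_deployment("demo-web", "nginx:latest")
print(json.dumps(manifest, indent=2))
```

Because kubectl accepts JSON as well as YAML, the output could be saved as `deployment.json` and applied with `kubectl apply -f deployment.json` against an EKS cluster.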

Let’s look at the similarities between ECS and EKS before we dig deeper into the real value adds and how you can identify which one is right for you.

- Layer of Abstraction: they both come with their own levels of abstraction, called deployments (EKS) and tasks (ECS).

- Mix of AWS Compute Resources: they both let you use a mix of compute infrastructure based on availability, such as AWS Fargate, AWS Outposts, Amazon EC2, AWS Local Zones, and AWS Wavelength.

- No Monitoring or Operational Overhead: you don’t need to monitor or operate the services themselves. However, you still need to define health checks and monitoring for the applications they serve.

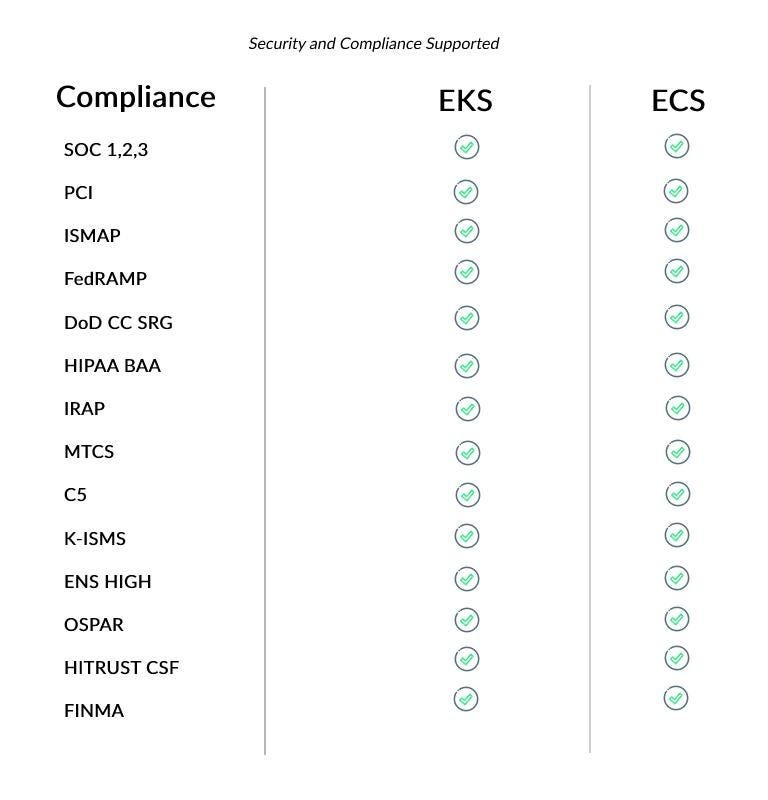

- Shared Security Model: they both operate within AWS IAM (Identity & Access Management), so you have full control over who can access ECS tasks or Kubernetes workloads.

Read on to find out which one is a good choice for your organisation and which best practices you can use to reduce your cloud bill.

How Do You Choose the Best Platform for Your Deployments?

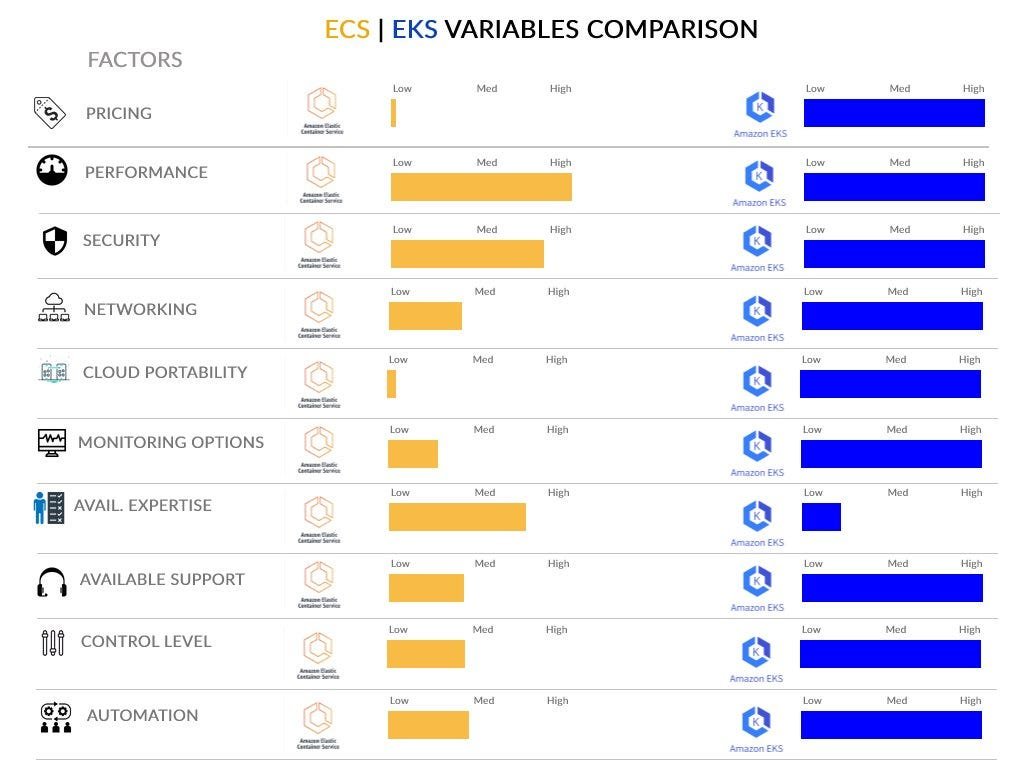

Here is the short version; read on for a full explanation of each factor.

There are 3 key factors to evaluate when determining the platform of your choice.

- Performance

- Cost

- Availability & Reliability

Understanding the differences is essential, as they establish the baseline for your financial expenditure and operational complexity.

For now, let’s look at how ECS and EKS differ on these factors, and then we will look at the pros and cons of each deployment option.

1. Performance

ECS and EKS both run on underlying VM infrastructure. The choice of compute resources defines the overall performance of your applications; from the viewpoint of the underlying infrastructure, however, both services publish SLAs of 99.9% or higher.

2. Cost

ECS: There is no additional charge for Amazon ECS. You pay for the AWS resources (e.g. EC2 instances, EBS volumes, VPC costs) you create to store and run your application. You only pay for what you use, as you use it; there are no minimum fees and no upfront commitments.

EKS: You pay $0.10 per hour for each Amazon EKS cluster that you create, plus the AWS resources (e.g. EC2 instances or EBS volumes) you create to run your Kubernetes worker nodes. You only pay for what you use, as you use it; there are no minimum fees and no upfront commitments.

For a single cluster running for a full month, the service fee amounts to:

1 cluster x 0.10 USD per hour x 730 hours per month = 73.00 USD

This is what you pay for the service itself, over and above the cost of the AWS resources (e.g. EC2 instances, EBS volumes, VPC) that you use.
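The same arithmetic can be written as a small helper if you want to estimate the service fee for more than one cluster. This is only a sketch: the hourly rate and the 730-hour month are the figures quoted in this article, and the function name is my own.

```python
from decimal import Decimal

# Figures quoted in this article; check current AWS pricing before relying on them.
EKS_FEE_PER_CLUSTER_HOUR = Decimal("0.10")  # USD per cluster per hour
HOURS_PER_MONTH = 730                        # average month used for estimates


def eks_monthly_service_fee(clusters, hours=HOURS_PER_MONTH):
    """Return the EKS control plane fee in USD, excluding all other resources."""
    return clusters * EKS_FEE_PER_CLUSTER_HOUR * hours


print(eks_monthly_service_fee(1))  # 73.00
print(eks_monthly_service_fee(3))  # 219.00
```

Using `Decimal` instead of floats keeps the currency arithmetic exact, which matters once you start summing many line items.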

If you run ECS or EKS on AWS Fargate or use AWS Outposts, the cost will increase further. You can estimate it using the AWS Pricing Calculator.

3. Availability and Reliability

It depends entirely on what services you are planning to provide on the platform. Services that require high availability need some amount of redundancy. Services typically run at some optimal utilization in steady state, which includes headroom to absorb bursts, and for highly available services this headroom also needs to factor in absorbing failover load.

Deploying your application across 3 Availability Zones is the preferred choice for highly available, business-critical applications; however, the number of Availability Zones is a cost vs. availability trade-off.
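To see why the number of Availability Zones is a cost trade-off, here is a simplified sketch of the headroom reasoning. It assumes load is spread evenly across zones and that the surviving zones must absorb the full load of the lost one; burst headroom is ignored, and the function name is hypothetical.

```python
def max_steady_state_utilization(zones, zones_lost=1):
    """Highest steady-state utilization that still survives losing `zones_lost` zones.

    Assumes capacity and load are spread evenly across all zones.
    """
    if zones <= zones_lost:
        raise ValueError("need more zones than the number assumed lost")
    return (zones - zones_lost) / zones


for zones in (2, 3, 4):
    utilization = max_steady_state_utilization(zones)
    print(f"{zones} AZs: run at up to {utilization:.0%}, keep {1 - utilization:.0%} as failover headroom")
```

Under these assumptions, two zones force you to keep half of your capacity idle, while three zones need only about a third, which is why a three-zone spread is often a reasonable balance.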

Both ECS and EKS provide high-availability capabilities, but when it comes to scalability, EKS has the native support of the Kubernetes feature set.

For ECS

A spread of EC2 instances across three Availability Zones in your cluster delivers a good balance of availability and cost: it improves the ratio of steady-state utilization to headroom (less idle capacity is needed than with two zones) while still meeting the availability goal. You can also leverage the burst capabilities of T3 instances to absorb some portion of potential failover load, supported by an EC2 Auto Scaling group that ensures lost capacity is replaced. This can be a very compelling cost-saving mechanism.

For EKS

Amazon EKS runs and scales the Kubernetes control plane across multiple AWS Availability Zones to ensure high availability. Amazon EKS automatically scales control plane instances based on load, detects and replaces unhealthy control plane instances, and it provides automated version updates and patching for them. Because of this, Amazon EKS is able to offer a great SLA for API server endpoint availability.

This control plane consists of at least two API server instances and three etcd instances that run across three Availability Zones within a Region.

This is a preferred choice as you are not managing the underlying infrastructure.

___________________________________________________________________

Now let’s have a look at the Pros and Cons of these services and when to use which one.

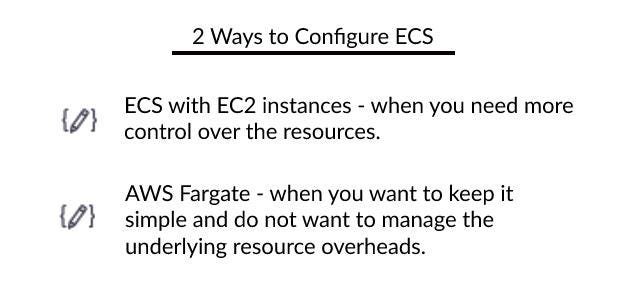

ECS: Elastic Container Service with EC2 Cluster

In this model, containers are deployed to EC2 instances (VMs) created for the cluster. ECS manages them together with tasks that are part of the task definition.

ECS: ECS with AWS Fargate

In this model, you don’t need to worry about EC2 instances or servers anymore. Just choose the CPU and memory combo you need and your containers will be deployed there.

Pros:

- No servers to manage when you go for the ECS + Fargate model.

- AWS is in charge of container availability and scalability. Still, you need to select the right CPU and memory; otherwise, you risk your application becoming unavailable.

- ECS doesn’t come with any additional charges, which helps when cost is a primary concern.

- You have full control over the type of EC2 instance used when you go for EC2 Clusters for ECS deployments. For example, you can use a GPU-optimized instance type if you need to run training for a machine learning model that comes with unique GPU requirements.

- You can take advantage of Spot instances that reduce cloud costs by up to 90% when you choose ECS on EC2 clusters.

- You can use Fargate Spot, a new capability that can run interruption-tolerant ECS Tasks at up to a 70% discount off the Fargate price.

Cons:

- ECS + Fargate supports only one networking mode, awsvpc, which limits your control over the networking layer. Because every task gets its own ENI, you also cannot share an ENI to pack more tasks onto the same host.

- If you choose ECS on EC2 clusters, you’re the one responsible for security patches and network security of the instances, as well as their scalability in the cluster (thankfully, you can use EC2 Auto Scaling for that).

- ECS comes with a set of default tools. For example, you can use only the web console, CLI, and SDKs for management. Logging and performance monitoring are carried out using CloudWatch, service discovery through Route 53, and deployments via ECS itself.

- You get no control plane of your own to access or customize; once your cluster is set up, you configure and deploy tasks directly from the AWS Management Console.

- ECS is an AWS proprietary technology, so workloads are not portable to other cloud providers.

- If you build an application in ECS, you’re likely to encounter a vendor lock-in issue in the long run.

- Networking for Amazon ECS tasks hosted on Amazon EC2 instances depends on the network mode defined in the task definition, and is therefore limited to one of:

  - awsvpc: The task is allocated its own elastic network interface (ENI) and a primary private IPv4 address. This gives the task the same networking properties as Amazon EC2 instances.

  - bridge: The task utilizes Docker’s built-in virtual network, which runs inside each Amazon EC2 instance hosting the task.

  - host: The task bypasses Docker’s built-in virtual network and maps container ports directly to the ENI of the Amazon EC2 instance hosting the task. As a result, you can’t run multiple instantiations of the same task on a single Amazon EC2 instance when port mappings are used.

  - none: The task has no external network connectivity.

- Limited support options and limited community assistance: as it is an AWS proprietary technology, you become more dependent on AWS support.
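The network mode constraints listed above (awsvpc only on Fargate; awsvpc, bridge, host, or none on EC2) can be captured in a small lookup. This is a sketch for illustration: the table reflects only what this article states, and the function and constant names are my own.

```python
# Network modes per launch type, as described in this article.
SUPPORTED_NETWORK_MODES = {
    "FARGATE": {"awsvpc"},
    "EC2": {"awsvpc", "bridge", "host", "none"},
}


def is_network_mode_supported(launch_type, network_mode):
    """Return True if the network mode is listed for the given launch type."""
    try:
        allowed = SUPPORTED_NETWORK_MODES[launch_type]
    except KeyError:
        raise ValueError(f"unknown launch type: {launch_type!r}") from None
    return network_mode in allowed


print(is_network_mode_supported("FARGATE", "bridge"))  # False
print(is_network_mode_supported("EC2", "bridge"))      # True
```

A check like this could run before a task definition is registered, so that an unsupported combination is caught early rather than at deployment time.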

When to use:

Some teams might benefit from ECS more than EKS thanks to its simplicity, and it is a good starting point for a containerization journey. Consider ECS in the following cases:

- You have limited DevOps resources.

- When you don’t have time or resources to pick and choose add-ons: ECS could be the first step. It allows you to try your hand at containerization and move your workloads into a managed service without huge upfront investment.

- When simplicity matters more to you than flexibility: ECS is very simple, but it comes with limitations.

- If you’re just exploring microservices or containers, ECS is a better option.

- Keep in mind that it’s not a good fit for highly regulated environments where companies use dedicated tenancy hosting.

- When you want to deploy workloads that are designed to be managed through a simple API for creating containerized workloads, without any complex abstractions.

___________________________________________________________________

EKS (Elastic Kubernetes Service)

Pros:

- You don’t have to install, operate, and maintain your own Kubernetes control plane.

- EKS allows you to easily run tooling and plugins developed by the Kubernetes open-source community.

- EKS automates load distribution and parallel processing through Kubernetes scheduling, which removes a lot of manual DevOps work.

- Your Kubernetes assets integrate seamlessly with AWS services if you use EKS.

- EKS uses VPC networking.

- With EKS, you can assign a dedicated network interface to a pod to improve security. All the containers inside that pod will share the internal network and public IP.

- You can share an ENI between multiple pods, which allows you to place more pods per instance.

- Any application running on EKS is compatible with one running in your existing Kubernetes environment.

- Kubernetes in EKS allows you to package your containers and move them to another platform quickly.

- The ideal scenario is when you can move your workloads from one cloud provider to another with minimal disruption. EKS fully supports cloud portability.

- Extensive documentation and support are available beyond AWS support, thanks to the open-source project’s very active community and publicly available resources (videos, official trainings, online courses, Helm charts, Kubernetes Operators).

Cons:

- EKS charges you an additional $0.10 per hour per Kubernetes cluster on top of the charges for other resources; unlike ECS, the service itself is not free.

- Deploying clusters on EKS is a bit more complex and requires hands-on Kubernetes configuration experience, as you need to configure and deploy pods via Kubernetes.

- You need more expertise and operational knowledge to deploy and manage applications on EKS compared to ECS.

When to use:

- If you have the right experience and operational resources and time to delve into using Kubernetes as part of your core architectural design.

- When you need granular control over container placement: ECS doesn’t have a concept similar to pods. So, if you need fine-grained control over container placement, you are better off moving to EKS.

- When you need more networking modes: ECS has only one networking mode available in Fargate. If your serverless app needs something else, EKS is a better choice.

- If you’d like to have the freedom to integrate with the open-source Kubernetes community or keep your apps portable to other cloud providers, putting in the energy and time to make EKS work is worth it.

___________________________________________________________________

I hope this analysis helps you choose the right AWS services for your workload deployments. And yes, if you find this informative and relevant, please follow, like and share.

I would like to thank Armon Dadgar, Anton Babenko, Edward Viaene, Nitin Shah, Sandip Das, Eric Guz, Mike Fitzpatric, Josh Lafave, Rahul Bansal, and Shyam Upadhyay for keeping me inspired through their guidance, knowledge sharing, training, speeches, and webinars, and for the words that kept me motivated to pursue the research.